- Type: Bug

- Resolution: Fixed

- Priority: High

- Affects Version/s: 9.2.1, 9.2.3, 9.2.4

- Component/s: Core - Analytics


- Severity 3 - Minor


Issue Summary

Confluence Analytics keeps sending requests to an Amazon S3 bucket to upload analytics data.

Disabling analytics, either in the UI or with the dark feature, is not honored: Confluence still tries to reach the AWS S3 endpoints. When Confluence runs in a private network, these requests fail with the stack traces below, and the repeated traces spam the logs.

Steps to Reproduce

- Set up Confluence 9.2.0

- The analytics plugin is enabled by default

- Create several spaces (around 3-5)

- Create a few pages (around 3-5)

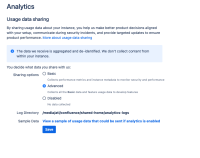

- Disable Analytics usage data sharing using one of these methods:

    - Method-1: Go to General Configuration > Analytics and select 'Disabled'.

    - Method-2:

        - Stop Confluence

        - Add the following JVM argument to disable the com.atlassian.analytics.essential.supported dark feature:

          -Dcom.atlassian.analytics.essential.supported=false

- Ensure the Confluence server/machine is not connected to the internet/Wi-Fi

- Start Confluence

- Tail the logs: Confluence still sends requests to the Amazon S3 bucket even though usage data sharing is disabled, producing errors such as:

2025-04-18 16:12:35,989 ERROR [Caesium-1-4] [impl.schedule.caesium.JobRunnerWrapper] runJob Scheduled job class com.atlassian.analytics.client.upload.RemoteFilterRead_JobHandlerKey#com.atlassian.analytics.client.upload.RemoteFilterRead:job failed to run
io.atlassian.util.concurrent.LazyReference$InitializationException: software.amazon.awssdk.core.exception.SdkClientException: Received an UnknownHostException when attempting to interact with a service. See cause for the exact endpoint that is failing to resolve. If this is happening on an endpoint that previously worked, there may be a network connectivity issue or your DNS cache could be storing endpoints for too long.
Caused by: software.amazon.awssdk.core.exception.SdkClientException: Received an UnknownHostException when attempting to interact with a service. See cause for the exact endpoint that is failing to resolve. If this is happening on an endpoint that previously worked, there may be a network connectivity issue or your DNS cache could be storing endpoints for too long.
    at software.amazon.awssdk.core.exception.SdkClientException$BuilderImpl.build(SdkClientException.java:111)
Caused by: software.amazon.awssdk.core.exception.SdkClientException: Unable to execute HTTP request: btf-analytics.s3.dualstack.us-east-1.amazonaws.com
    at software.amazon.awssdk.core.exception.SdkClientException$BuilderImpl.build(SdkClientException.java:111)
    Suppressed: software.amazon.awssdk.core.exception.SdkClientException: Request attempt 1 failure: Unable to execute HTTP request: btf-analytics.s3.dualstack.us-east-1.amazonaws.com: nodename nor servname provided, or not known
    Suppressed: software.amazon.awssdk.core.exception.SdkClientException: Request attempt 2 failure: Unable to execute HTTP request: btf-analytics.s3.dualstack.us-east-1.amazonaws.com
    Suppressed: software.amazon.awssdk.core.exception.SdkClientException: Request attempt 3 failure: Unable to execute HTTP request: btf-analytics.s3.dualstack.us-east-1.amazonaws.com
Caused by: java.net.UnknownHostException: btf-analytics.s3.dualstack.us-east-1.amazonaws.com

2025-05-23 06:39:54,601 ERROR [analyticsEventProcessor:thread-1] [analytics.client.pipeline.DefaultAnalyticsPipeline] lambda$createTask$0 Failed to send analytics event com.atlassian.analytics.api.events.MauEvent@8fae475
com.atlassian.cache.CacheException: io.atlassian.util.concurrent.LazyReference$InitializationException: software.amazon.awssdk.core.exception.SdkClientException: Unable to execute HTTP request: Connect to btf-analytics.s3.dualstack.us-east-1.amazonaws.com:443 [btf-analytics.s3.dualstack.us-east-1.amazonaws.com/16.182.104.194, btf-analytics.s3.dualstack.us-east-1.amazonaws.com/52.217.136.146, btf-analytics.s3.dualstack.us-east-1.amazonaws.com/52.217.37.80, btf-analytics.s3.dualstack.us-east-1.amazonaws.com/16.182.64.226, btf-analytics.s3.dualstack.us-east-1.amazonaws.com/52.216.214.186, btf-analytics.s3.dualstack.us-east-1.amazonaws.com/3.5.17.66, btf-analytics.s3.dualstack.us-east-1.amazonaws.com/52.216.139.6, btf-analytics.s3.dualstack.us-east-1.amazonaws.com/52.216.154.176, btf-analytics.s3.dualstack.us-east-1.amazonaws.com/2600:1fa0:8193:b9f0:10b6:6be2:0:0, btf-analytics.s3.dualstack.us-east-1.amazonaws.com/2600:1fa0:816f:9ba1:34d9:775a:0:0, btf-analytics.s3.dualstack.us-east-1.amazonaws.com/2600:1fa0:814f:f490:36e7:81a2:0:0, btf-analytics.s3.dualstack.us-east-1.amazonaws.com/2600:1fa0:80dc:5011:34d9:47a8:0:0, btf-analytics.s3.dualstack.us-east-1.amazonaws.com/2600:1fa0:81ef:9ea9:34d8:d29a:0:0, btf-analytics.s3.dualstack.us-east-1.amazonaws.com/2600:1fa0:8193:aea0:10b6:6102:0:0, btf-analytics.s3.dualstack.us-east-1.amazonaws.com/2600:1fa0:807b:eec8:34d9:ad52:0:0, btf-analytics.s3.dualstack.us-east-1.amazonaws.com/2600:1fa0:81eb:a8e0:34d8:d8c2:0:0] failed: Connect timed out
    at com.atlassian.cache.ehcache.DelegatingCache.get(DelegatingCache.java:114)
    at com.atlassian.cache.impl.metrics.InstrumentedCache.get(InstrumentedCache.java:72)
    at com.atlassian.confluence.cache.ehcache.DefaultConfluenceEhCache.get(DefaultConfluenceEhCache.java:39)
    at com.atlassian.analytics.client.pipeline.serialize.properties.extractors.mau.MauService.hashEmailPropertyForMauEvent(MauService.java:44)
    [...]
    at software.amazon.awssdk.core.internal.http.pipeline.stages.ApiCallAttemptMetricCollectionStage.execute(ApiCallAttemptMetricCollectionStage.java:39)
    at software.amazon.awssdk.core.internal.http.pipeline.stages.RetryableStage2.executeRequest(RetryableStage2.java:93)
    at software.amazon.awssdk.core.internal.http.pipeline.stages.RetryableStage2.execute(RetryableStage2.java:56)
    ... 55 more
Caused by: java.net.SocketTimeoutException: Connect timed out
    at java.base/sun.nio.ch.NioSocketImpl.timedFinishConnect(Unknown Source)
    at java.base/sun.nio.ch.NioSocketImpl.connect(Unknown Source)
    at java.base/java.net.SocksSocketImpl.connect(Unknown Source)
    at java.base/java.net.Socket.connect(Unknown Source)
    at org.apache.http.conn.ssl.SSLConnectionSocketFactory.connectSocket(SSLConnectionSocketFactory.java:368)
    at software.amazon.awssdk.http.apache.internal.conn.SdkTlsSocketFactory.connectSocket(SdkTlsSocketFactory.java:63)
    at org.apache.http.impl.conn.DefaultHttpClientConnectionOperator.connect(DefaultHttpClientConnectionOperator.java:142)
    ... 84 more
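When reproducing the issue (or checking a workaround), it can help to count which loggers report failures against the S3 host instead of reading raw stack traces. The following is a minimal Python sketch, assuming the entry layout visible in the excerpts above (timestamp, level, `[thread]`, `[logger]`); the function name `count_s3_failures` is hypothetical and this is not an Atlassian tool. Each log entry, including its continuation lines (`Caused by:`, `at ...`), is counted at most once.

```python
import re
from collections import Counter

# Host name taken from the stack traces in this report.
S3_HOST = "btf-analytics.s3.dualstack.us-east-1.amazonaws.com"

# Assumed start of a log entry, e.g.
# "2025-04-18 16:12:35,989 ERROR [Caesium-1-4] [impl.schedule.caesium.JobRunnerWrapper] ..."
ENTRY_RE = re.compile(
    r"^(?P<ts>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2},\d{3}) (?P<level>[A-Z]+) "
    r"\[(?P<thread>[^\]]+)\] \[(?P<logger>[^\]]+)\]"
)


def count_s3_failures(lines):
    """Count log entries mentioning S3_HOST, keyed by the logger that wrote them."""
    counts = Counter()
    logger = None    # logger of the entry currently being read
    matched = False  # True once the current entry has been counted
    for line in lines:
        m = ENTRY_RE.match(line)
        if m:
            # A new entry starts; continuation lines until the next header belong to it.
            logger, matched = m.group("logger"), False
        if logger is not None and not matched and S3_HOST in line:
            counts[logger] += 1
            matched = True
    return counts
```

Any iterable of lines works, so an open file handle for the application log (assumed to be `<confluence-home>/logs/atlassian-confluence.log`) can be passed directly; an empty result after applying a workaround suggests the requests have stopped.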

Expected Results

We would expect Confluence to stop triggering requests to the S3 bucket when the data sharing feature is disabled.

Actual Results

Confluence is still triggering requests to the S3 bucket when the data sharing feature is disabled.

Workaround

Method 1

Set the log level for the com.atlassian.analytics.client.pipeline.DefaultAnalyticsPipeline class to FATAL by updating log4j.properties as shown below, so that its ERROR entries are no longer written:

log4j.logger.com.atlassian.analytics.client.pipeline.DefaultAnalyticsPipeline=FATAL


Doing this does not stop Analytics from trying to send data to the Amazon S3 endpoint, even when that endpoint is blocked in your network. It only hides these errors from the logs.
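Log4j levels act as a per-logger threshold: once a logger is set to FATAL, events below FATAL from that logger (including the ERROR entries above) are discarded, while loggers with unrelated names keep their own levels. For instance, the first trace above is written by impl.schedule.caesium.JobRunnerWrapper rather than DefaultAnalyticsPipeline, so this line on its own would not be expected to hide it. The sketch below illustrates the same threshold rule using Python's standard `logging` module as a stand-in for Log4j; the `sketch.*` logger names are hypothetical shortened forms and nothing here is Confluence code.

```python
import logging


class ListHandler(logging.Handler):
    """Collects records in memory so the effect of the threshold is visible."""

    def __init__(self):
        super().__init__()
        self.records = []

    def emit(self, record):
        self.records.append(f"{record.levelname} [{record.name}] {record.getMessage()}")


def demo():
    handler = ListHandler()
    parent = logging.getLogger("sketch")  # hypothetical parent for both loggers
    parent.handlers[:] = [handler]
    parent.setLevel(logging.DEBUG)
    parent.propagate = False  # keep the demo output away from the root logger

    pipeline = logging.getLogger("sketch.DefaultAnalyticsPipeline")
    scheduler = logging.getLogger("sketch.JobRunnerWrapper")

    # Analogue of log4j.logger....DefaultAnalyticsPipeline=FATAL
    # (in Python, logging.FATAL is an alias for logging.CRITICAL).
    pipeline.setLevel(logging.FATAL)

    pipeline.error("Failed to send analytics event")      # below FATAL: dropped
    scheduler.error("Scheduled job failed to run")         # other logger: still written
    pipeline.critical("only FATAL-level events get through")
    return handler.records
```

Calling `demo()` shows that only the scheduler's ERROR entry and the pipeline's FATAL/CRITICAL entry reach the handler; the pipeline's ERROR entry is filtered out before any handler sees it.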

Method 2

- Confirm from the logs that Confluence is still sending the requests

- Stop Confluence

- Run the following query in the database to disable the plugin:

update BANDANA set BANDANAVALUE='<map> <entry> <string>com.atlassian.analytics.analytics-client</string> <boolean>false</boolean> </entry> </map>' where BANDANAKEY='plugin.manager.state.Map';

- Ensure the Confluence server/machine is not connected to the internet/Wi-Fi

- Start Confluence

- Monitor the logs: requests to the S3 bucket are no longer sent
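The UPDATE in Method 2 replaces the entire BANDANAVALUE stored under plugin.manager.state.Map with a map that contains only the analytics-client entry, so any other plugin states already recorded in that row would be overwritten. Where the row holds other entries, merging is an alternative to overwriting. The following is a minimal Python sketch of such a merge, assuming the value uses the `<map>`/`<entry>`/`<string>`/`<boolean>` shape shown in the query; reading and writing the row (and the choice of database driver) is deliberately left out, and the function name `disable_plugin` is hypothetical.

```python
import xml.etree.ElementTree as ET

PLUGIN_KEY = "com.atlassian.analytics.analytics-client"


def disable_plugin(map_xml: str, plugin_key: str = PLUGIN_KEY) -> str:
    """Return map_xml with plugin_key set to false, keeping every other entry."""
    text = map_xml.strip()
    root = ET.fromstring(text) if text else ET.Element("map")
    if root.tag != "map":
        raise ValueError(f"expected <map> root, got <{root.tag}>")

    for entry in root.findall("entry"):
        key = entry.find("string")
        if key is not None and key.text == plugin_key:
            # Existing entry for the plugin: flip its flag to false.
            flag = entry.find("boolean")
            if flag is None:
                flag = ET.SubElement(entry, "boolean")
            flag.text = "false"
            break
    else:
        # No entry yet: append one in the same shape as the query above.
        entry = ET.SubElement(root, "entry")
        ET.SubElement(entry, "string").text = plugin_key
        ET.SubElement(entry, "boolean").text = "false"

    return ET.tostring(root, encoding="unicode")
```

The returned string would then be written back to the same BANDANA row while Confluence is stopped, exactly where the original query writes its literal value.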

Issue Links

- is related to: JRASERVER-70361 Jira have outgoing connections even though disabled Analytics (Closed)


- relates to: JSMDC-21724