-

Type:

Bug

-

Resolution: Incorrectly Filed

-

Priority:

Medium

-

None

-

Affects Version/s: 7.15.1, 7.17.2, 7.19.5

-

Component/s: Server - Performance

-

30

-

Severity 3 - Minor

-

64

Issue Summary

When a node is down for time enough to have a lot of new content to reindex, the incremental index will fill the heap and can lead to OutOfMemory errors. Confluence's Full GC won't be able to handle the load, causing slowness not only in the node but the whole cluster.

Steps to Reproduce

- Create a cluster with at least 2 nodes;

- Create some content and make sure both nodes are indexing properly;

- Check if <confluence-home>/journal/main_index and <confluence-home>/journal/change_index files have values added

- Make sure the JOURNALENTRY table has main_index and change_index values added to the journal_name column;

- Remove one node of the cluster, for example, node 2;

- Add a lot of content in the node that remains in the cluster, in this example, node 1;

- This issue was replicable with 6 million and 100k changes already;

- Bring back node 2 to the cluster;

- Node 2 will attempt to reindex the content that was created/updated while it was offline and trigger an incremental index;

Expected Results

Indexing the content created without affecting the performance of the node or the cluster.

Actual Results

The performance of the node and the cluster are affected. The following entries can be seen in the atlassian-confluence.log* file:

2022-04-11 13:23:07,662 WARN [hz.confluence.event-3] [cache.hazelcast.asyncinvalidation.SequenceTracker] isNextInSequence Invalidation sequence number 2682 is out sequence with last known 0 from Member [10.46.69.130]:5801 - 84c9d3ab-f96a-4b41-95e5-79ea943ae7ff 2022-04-11 13:23:07,668 WARN [hz.confluence.event-3] [confluence.cache.hazelcast.AsyncInvalidationCacheFactory] cacheInvalidationOutOfSequence Cache flushed due to out-of-sequence invalidation: 'com.atlassian.confluence.pages.persistence.dao.PageDao.getPage' 2022-04-11 13:23:07,931 WARN [hz.confluence.event-4] [cache.hazelcast.asyncinvalidation.CacheInvalidationSequenceSnapshotVerifier] verifyMinimumSequenceNumber Minimum sequence number 47 for Member [10.46.69.130]:5801 - 84c9d3ab-f96a-4b41-95e5-79ea943ae7ff was not met, flushing cache 'com.atlassian.confluence.mail.notification.persistence.NotificationDao.isUserWatchingContent' 2022-04-11 13:23:07,931 WARN [hz.confluence.event-4] [confluence.cache.hazelcast.AsyncInvalidationCacheFactory] sequenceSnapshotInconsistent Cache flushed due to inconsistent sequence snapshot: 'com.atlassian.confluence.mail.notification.persistence.NotificationDao.isUserWatchingContent' 2022-04-11 13:23:07,933 WARN [hz.confluence.event-4] [cache.hazelcast.asyncinvalidation.CacheInvalidationSequenceSnapshotVerifier] verifyMinimumSequenceNumber Minimum sequence number 58 for Member [10.46.69.130]:5801 - 84c9d3ab-f96a-4b41-95e5-79ea943ae7ff was not met, flushing cache 'com.atlassian.confluence.core.DefaultContentPropertyManager' 2022-04-11 13:23:07,933 WARN [hz.confluence.event-4] [confluence.cache.hazelcast.AsyncInvalidationCacheFactory] sequenceSnapshotInconsistent Cache flushed due to inconsistent sequence snapshot: 'com.atlassian.confluence.core.DefaultContentPropertyManager' 2022-04-11 13:23:07,933 WARN [hz.confluence.event-4] [cache.hazelcast.asyncinvalidation.CacheInvalidationSequenceSnapshotVerifier] verifyMinimumSequenceNumber Minimum sequence number 14 for Member [10.46.69.130]:5801 - 84c9d3ab-f96a-4b41-95e5-79ea943ae7ff was not met, flushing cache 'com.atlassian.confluence.pages.attachments.AttachmentCache' 2022-04-11 13:23:07,933 WARN [hz.confluence.event-4] [confluence.cache.hazelcast.AsyncInvalidationCacheFactory] sequenceSnapshotInconsistent Cache flushed due to inconsistent sequence snapshot: 'com.atlassian.confluence.pages.attachments.AttachmentCache' 2022-04-11 13:23:07,934 WARN [hz.confluence.event-4] [cache.hazelcast.asyncinvalidation.CacheInvalidationSequenceSnapshotVerifier] verifyMinimumSequenceNumber Minimum sequence number 127 for Member [10.46.69.130]:5801 - 84c9d3ab-f96a-4b41-95e5-79ea943ae7ff was not met, flushing cache 'com.atlassian.confluence.pages.AttachmentDownloadPathCache' 2022-04-11 13:23:07,934 WARN [hz.confluence.event-4] [confluence.cache.hazelcast.AsyncInvalidationCacheFactory] sequenceSnapshotInconsistent Cache flushed due to inconsistent sequence snapshot: 'com.atlassian.confluence.pages.AttachmentDownloadPathCache' 2022-04-11 13:23:08,287 WARN [hz.confluence.event-3] [cache.hazelcast.asyncinvalidation.SequenceTracker] isNextInSequence Invalidation sequence number 984 is out sequence with last known 0 from Member [10.46.69.131]:5801 - a0a0b1c5-5146-4bcc-9ffb-6daf77686be0 2022-04-11 13:23:08,287 WARN [hz.confluence.event-3] [confluence.cache.hazelcast.AsyncInvalidationCacheFactory] cacheInvalidationOutOfSequence Cache flushed due to out-of-sequence invalidation: 'com.atlassian.confluence.pages.persistence.dao.PageDao.getPage'

We also see the Full GCs triggering Allocation Failure messages:

2022-04-14T18:37:47.455+0000: 1018.951: [Full GC (Allocation Failure) 4077M->3939M(4096M), 11.7710536 secs] 2022-04-14T18:37:59.229+0000: 1030.724: [GC concurrent-mark-abort] 2022-04-14T18:38:00.007+0000: 1031.503: [GC pause (G1 Evacuation Pause) (young)-- 4077M->4075M(4096M), 0.3879943 secs] 2022-04-14T18:38:00.423+0000: 1031.919: [GC pause (G1 Evacuation Pause) (young) (initial-mark)-- 4077M->4077M(4096M), 0.1002985 secs] 2022-04-14T18:38:00.523+0000: 1032.019: [GC concurrent-root-region-scan-start] 2022-04-14T18:38:00.524+0000: 1032.020: [GC concurrent-root-region-scan-end, 0.0006556 secs] 2022-04-14T18:38:00.524+0000: 1032.020: [GC concurrent-mark-start] 2022-04-14T18:38:00.529+0000: 1032.025: [GC pause (G1 Evacuation Pause) (young) 4077M->4077M(4096M), 0.0254765 secs] 2022-04-14T18:38:00.567+0000: 1032.063: [Full GC (Allocation Failure) 4077M->3944M(4096M), 11.4921629 secs] 2022-04-14T18:38:12.059+0000: 1043.555: [GC concurrent-mark-abort] 2022-04-14T18:38:12.605+0000: 1044.100: [GC pause (G1 Evacuation Pause) (young)-- 4078M->4076M(4096M), 0.3788891 secs] 2022-04-14T18:38:13.000+0000: 1044.496: [GC pause (G1 Evacuation Pause) (young) (initial-mark)-- 4078M->4078M(4096M), 0.0758366 secs] 2022-04-14T18:38:13.077+0000: 1044.572: [GC concurrent-root-region-scan-start] 2022-04-14T18:38:13.077+0000: 1044.573: [GC concurrent-root-region-scan-end, 0.0006408 secs] 2022-04-14T18:38:13.077+0000: 1044.573: [GC concurrent-mark-start] 2022-04-14T18:38:13.078+0000: 1044.574: [GC pause (G1 Evacuation Pause) (young) 4078M->4078M(4096M), 0.0251643 secs]

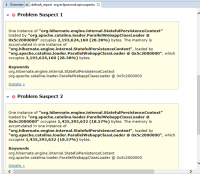

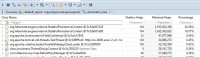

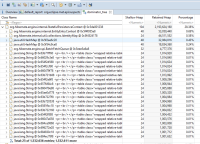

In the heap dump, we can see that the incremental index has brought a lot of content into memory, according to the screenshots attached to this bug.

Workaround

Compare the JOURNALENTRY table entries with the content in the <confluence-home>/journal/main_index file:

select * from journalentry; select * from journalentry where entry_id > main_index_value;

If the delta of those results is bigger than 10k, we will most likely have performance issues while indexing the new content. We can copy and paste the index of a healthy node into a node that is behind on the content created to prevent the incremental index from being triggered:

- Stop the working node;

- Copy the confluence-home/journal and confluence-home/index folders of it;

- Start the working node;

- Stop the faulty node;

- Delete the current confluence-home/journal and confluence-home/index folders from the faulty node;

- Paste the copied confluence-home/journal and confluence-home/index folders from the working node into it;

- When the nodes in the cluster are stable, start the node that is not working;

![]() We can also use the https://bitbucket.org/goodsoftwareco/good-confluence-data-generator/downloads/ app to create a large amount of content in Confluence and replicate this issue.

We can also use the https://bitbucket.org/goodsoftwareco/good-confluence-data-generator/downloads/ app to create a large amount of content in Confluence and replicate this issue.

Note

This behavior was only replicated when the incremental index is used to update the local index on startup. If the index recovery or full rebuild is triggered instead, the problem is not observed. To check which one will be used, proceed as follows before starting the node:

- Get the journal ID from <Confluence-Local-Home>/journal/main_index on the node that is about to be started

- Replace that ID on the following query and run it:

select * from journalentry where entry_id = <main_index_id>;

If it finds the ID on the database, the incremental index will be used and it is recommended to run the workaround steps above before starting.

If the ID is not found, index recovery or a full rebuild will be performed on startup.

- relates to

-

CONFSERVER-83197 Confluence crashes with out of memory (OOM) error during flushQueue shortly after startup

-

- Closed

-

- mentioned in

-

Page Loading...

-

Page Loading...

-

Page Loading...

-

Page Loading...

-

Page Loading...

-

Page Loading...

-

Page Loading...

-

Page Loading...

-

Page Loading...

-

Page Loading...

-

Page Loading...

-

Page Loading...