Type: Bug

Resolution: Fixed

Priority: Highest

Affects Version/s: 6.13.23, 8.1.0, 7.19.6, 7.19.7

Component/s: Search - Indexing, Server - Performance

Severity 2 - Major

Issue Summary

A Confluence instance will run out of memory and crash shortly after startup while attempting to catch up on an index backlog.

This issue affects Confluence Server, single-node Confluence Data Center and multi-node Confluence Data Center instances.

Steps to Reproduce

Example scenario to reproduce the issue in a multi-node DC setup:

- Create a Confluence DC cluster with at least 2 nodes.

- Create some content and make sure both nodes are indexing properly.

- Directly on both nodes:

  - Go to Confluence Administration » General Configuration » Content Indexing.

  - Check that both the Content queue and the Change queue show "No items in the queue".

- Shut down Node 2.

- Add a lot of content to Node 1, which remains in the cluster. This includes, but is not limited to, any of the following scenarios:

  - 7000 page creations with a content size of 900KB, each page's parent being the previously created page (i.e. ending up with a page tree depth of 7000);

  - 7000 page creations with a content size of 900KB across 7000 different spaces.

- Bring Node 2 back into the cluster (with a maximum heap, Xmx, of 6GB).

- Node 2 will attempt to flush the index to catch up on content indexing since it was last shut down.
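The content-creation step can be scripted. Below is a minimal Java 11 sketch that assumes the Confluence REST endpoint POST /rest/api/content with basic authentication. The space key REPRO, the helper names (filler, pageJson, createPage) and the filler body are hypothetical placeholders and are not part of the original report. The sketch builds the request payload and can send a single request. It is not the script used to produce the results above.

```java
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;
import java.util.Base64;

// Hypothetical workload generator for the reproduction steps (not Atlassian's tooling).
public class ReproPayload {

    // Storage-format body of exactly sizeBytes ASCII characters (for sizeBytes >= 7).
    static String filler(int sizeBytes) {
        String open = "<p>", close = "</p>";
        int n = Math.max(0, sizeBytes - open.length() - close.length());
        return open + "x".repeat(n) + close;
    }

    // JSON for POST /rest/api/content; parentId == null creates a top-level page.
    // title and spaceKey are inserted unescaped, so they must not contain quotes or backslashes.
    static String pageJson(String spaceKey, String title, Long parentId, int sizeBytes) {
        String ancestors = parentId == null ? "" : ",\"ancestors\":[{\"id\":" + parentId + "}]";
        return "{\"type\":\"page\",\"title\":\"" + title + "\""
                + ",\"space\":{\"key\":\"" + spaceKey + "\"}"
                + ancestors
                + ",\"body\":{\"storage\":{\"value\":\"" + filler(sizeBytes)
                + "\",\"representation\":\"storage\"}}}";
    }

    // Sends one create-page request. main does not call it, so running main makes no network calls.
    static HttpResponse<String> createPage(HttpClient client, String baseUrl, String user,
                                           String password, String json) throws Exception {
        String auth = Base64.getEncoder().encodeToString(
                (user + ":" + password).getBytes(StandardCharsets.UTF_8));
        HttpRequest request = HttpRequest.newBuilder(URI.create(baseUrl + "/rest/api/content"))
                .header("Content-Type", "application/json")
                .header("Authorization", "Basic " + auth)
                .POST(HttpRequest.BodyPublishers.ofString(json))
                .build();
        return client.send(request, HttpResponse.BodyHandlers.ofString());
    }

    public static void main(String[] args) {
        // 900KB taken here as 900 * 1024 bytes; parent id 123 is a placeholder.
        String json = pageJson("REPRO", "Repro page 1", 123L, 900 * 1024);
        System.out.println("payload characters: " + json.length());
    }
}
```

For the deep-tree scenario, pass the id of each newly created page (returned in the response body) as parentId for the next request. For the many-spaces scenario, leave parentId as null and use a different space key for each page.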

Expected Results

Given the default index.queue.batch.size value of 1500 items indexed per batch, as documented under Recognized System Properties:

index.queue.batch.size: Size of batches used by the indexer. Reducing this value will reduce the load that the indexer puts on the system, but indexing takes longer. Increasing this value will cause indexing to be completed faster, but puts a higher load on the system. Normally this setting does not need tuning.

The node should start up, catch up with the Content Indexing without excessive memory use and form a stable cluster.
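The following Java sketch compares the default batch size with the reproduction numbers. It splits a 7000-entry backlog into batches and calculates how much raw page text one full batch would reference if every page in that batch were loaded at once. It reads the index.queue.batch.size system property through Integer.getInteger and falls back to 1500. This is illustrative arithmetic under that assumption, not Confluence's indexing code. The real footprint depends on how many copies of each body are held at the same time, which this report does not state.

```java
import java.util.ArrayList;
import java.util.List;

// Illustrative arithmetic only; not Confluence's indexing implementation.
public class BatchMath {

    static final int DEFAULT_BATCH_SIZE = 1500; // documented default for index.queue.batch.size

    // Reads -Dindex.queue.batch.size from this JVM, falling back to 1500.
    static int batchSize() {
        return Integer.getInteger("index.queue.batch.size", DEFAULT_BATCH_SIZE);
    }

    // Sizes of the consecutive batches needed to drain `backlog` queued entries.
    static List<Integer> batchSizes(int backlog, int batchSize) {
        if (batchSize <= 0) throw new IllegalArgumentException("batchSize must be positive");
        List<Integer> sizes = new ArrayList<>();
        for (int remaining = backlog; remaining > 0; remaining -= batchSize) {
            sizes.add(Math.min(batchSize, remaining));
        }
        return sizes;
    }

    // Raw body bytes referenced by one full batch, assuming a single copy per page.
    static long rawBytesPerBatch(int batchSize, long bodyBytes) {
        return batchSize * bodyBytes;
    }

    public static void main(String[] args) {
        int size = batchSize();
        List<Integer> sizes = batchSizes(7000, size);
        double gib = rawBytesPerBatch(size, 900L * 1024) / (1024.0 * 1024 * 1024);
        System.out.printf("batch size %d -> %d batches %s%n", size, sizes.size(), sizes);
        System.out.printf("~%.2f GiB of raw page text per full batch%n", gib);
    }
}
```

With the defaults, this produces five batches (four of 1500 and one of 1000) and roughly 1.29 GiB of raw text per full batch, against the 6GB heap used in the reproduction. Java's compact strings store ASCII text at one byte per character, so any additional String copies of each body add to that figure.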

Actual Results

After 5-15 minutes, the newly started Node 2 encounters an OutOfMemoryError and crashes.

The following entries can eventually be seen in the atlassian-confluence.log file, 5-15 minutes after startup:

2023-04-17 13:05:55,350 INFO [Catalina-utility-1] [com.atlassian.confluence.lifecycle] contextInitialized Starting Confluence 7.19.6 [build 8804 based on commit hash 739c878e343cec3da85b03d89ce8a1c38cb15fbc] - synchrony version 5.0.3

...

2023-04-17 13:12:13,321 ERROR ... java.lang.OutOfMemoryError: Java heap space

...

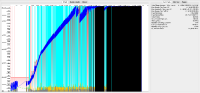
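When triaging several nodes, it can help to measure how long each node ran before hitting the heap OOM. The Java sketch below assumes the log timestamp format shown in the entries above (yyyy-MM-dd HH:mm:ss,SSS). It takes the most recent "Starting Confluence" entry before the first "java.lang.OutOfMemoryError: Java heap space" line and reports the gap between them. The class and method names are illustrative.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.time.Duration;
import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;
import java.time.format.DateTimeParseException;
import java.util.List;
import java.util.Optional;

public class OomLogCheck {

    // Timestamp prefix of the entries quoted above, e.g. "2023-04-17 13:05:55,350".
    private static final String PATTERN = "yyyy-MM-dd HH:mm:ss,SSS";
    private static final DateTimeFormatter TS = DateTimeFormatter.ofPattern(PATTERN);
    private static final String OOM = "java.lang.OutOfMemoryError: Java heap space";

    // Parses the leading timestamp; stack-trace continuation lines have none.
    static Optional<LocalDateTime> timestamp(String line) {
        if (line.length() < PATTERN.length()) return Optional.empty();
        try {
            return Optional.of(LocalDateTime.parse(line.substring(0, PATTERN.length()), TS));
        } catch (DateTimeParseException e) {
            return Optional.empty();
        }
    }

    // Time from the most recent "Starting Confluence" entry to the first heap OOM after it.
    static Optional<Duration> startupToOom(List<String> lines) {
        LocalDateTime start = null;
        LocalDateTime last = null; // latest timestamp seen, used if the OOM text has no timestamp
        for (String line : lines) {
            Optional<LocalDateTime> ts = timestamp(line);
            if (ts.isPresent()) last = ts.get();
            if (ts.isPresent() && line.contains("Starting Confluence")) {
                start = ts.get();
            } else if (start != null && line.contains(OOM)) {
                return Optional.of(Duration.between(start, last));
            }
        }
        return Optional.empty();
    }

    public static void main(String[] args) throws IOException {
        Path log = Path.of(args.length > 0 ? args[0] : "atlassian-confluence.log");
        // ISO-8859-1 never rejects bytes, so stray non-UTF-8 content cannot abort the read.
        List<String> lines = Files.readAllLines(log, StandardCharsets.ISO_8859_1);
        startupToOom(lines).ifPresentOrElse(
                d -> System.out.printf("heap OOM %.1f s after startup%n", d.toMillis() / 1000.0),
                () -> System.out.println("no startup-then-OOM sequence found"));
    }
}
```

For the two entries quoted above, the gap is 377.971 seconds (about 6 minutes 18 seconds), which falls within the 5-15 minute window.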

The GC logs show heap usage trending upward immediately after startup:

In Confluence 7.8.x and older, only the Edge index is auto-flushed on startup. The Main index is flushed upon a Lucene change event (e.g. a page edit).

In Confluence 7.9.0 and newer, both the Edge index and the Main index are auto-flushed immediately on startup.

CPU and thread dumps captured every 5 seconds immediately after startup show a Caesium thread executing flushQueue > processEntries:

"Caesium-1-2" #318 daemon prio=1 os_prio=31 cpu=69507.32ms elapsed=368.23s tid=0x00000002d9037000 nid=0x31707 runnable [0x00000002f620e000]
    java.lang.Thread.State: RUNNABLE
    ...
    at com.atlassian.confluence.core.ContentEntityObject.getBodyContent(ContentEntityObject.java:256)
    at com.atlassian.confluence.core.ContentEntityObject.getBodyAsStringWithoutMarkup(ContentEntityObject.java:982)
    at com.atlassian.confluence.core.ContentEntityObject.getExcerpt(ContentEntityObject.java:1008)
    at com.atlassian.confluence.search.lucene.extractor.ContentEntityMetadataExtractor.extractExcerpt(ContentEntityMetadataExtractor.java:49)
    at com.atlassian.confluence.search.lucene.extractor.ContentEntityMetadataExtractor.addFields(ContentEntityMetadataExtractor.java:39)
    at com.atlassian.confluence.internal.index.lucene.LuceneChangeExtractor.invokeExtractors(LuceneChangeExtractor.java:112)
    at com.atlassian.confluence.internal.index.lucene.LuceneChangeExtractor.extractFields(LuceneChangeExtractor.java:74)
    at com.atlassian.confluence.internal.index.v2.CompositeExtractor.extract(CompositeExtractor.java:43)
    at com.atlassian.confluence.internal.index.v2.Extractor2DocumentBuilder.build(Extractor2DocumentBuilder.java:48)
    at com.atlassian.confluence.internal.index.v2.AtlassianChangeDocumentBuilder.build(AtlassianChangeDocumentBuilder.java:35)
    at com.atlassian.confluence.internal.index.v2.AtlassianChangeDocumentBuilder.build(AtlassianChangeDocumentBuilder.java:14)
    at com.atlassian.confluence.search.lucene.tasks.AddChangeDocumentIndexTask.perform(AddChangeDocumentIndexTask.java:83)
    ...
    at com.atlassian.confluence.impl.journal.DefaultJournalManager.processNewEntries(DefaultJournalManager.java:81)
    at com.atlassian.confluence.impl.journal.DefaultJournalService.processEntries(DefaultJournalService.java:40)
    at com.atlassian.confluence.api.service.journal.JournalService.processNewEntries(JournalService.java:100)
    at com.atlassian.confluence.search.queue.AbstractJournalIndexTaskQueue.flushQueue(AbstractJournalIndexTaskQueue.java:179)
    at com.atlassian.confluence.search.IndexTaskQueue.flushAndExecute(IndexTaskQueue.java:146)
    ...
    at com.atlassian.confluence.impl.search.IndexFlushScheduler.lambda$new$1(IndexFlushScheduler.java:122)
    at com.atlassian.confluence.impl.search.IndexFlushScheduler$$Lambda$807/0x0000000800ee5040.runJob(Unknown Source)
    ...
    at com.atlassian.scheduler.core.JobLauncher.launch(JobLauncher.java:90)
    at com.atlassian.scheduler.caesium.impl.CaesiumSchedulerService.launchJob(CaesiumSchedulerService.java:464)
    at com.atlassian.scheduler.caesium.impl.CaesiumSchedulerService.executeLocalJob(CaesiumSchedulerService.java:431)
    at com.atlassian.scheduler.caesium.impl.CaesiumSchedulerService.executeQueuedJob(CaesiumSchedulerService.java:409)
    at com.atlassian.scheduler.caesium.impl.CaesiumSchedulerService$$Lambda$3337/0x0000000803244040.accept(Unknown Source)
    at com.atlassian.scheduler.caesium.impl.SchedulerQueueWorker.executeJob(SchedulerQueueWorker.java:66)
    at com.atlassian.scheduler.caesium.impl.SchedulerQueueWorker.executeNextJob(SchedulerQueueWorker.java:60)
    at com.atlassian.scheduler.caesium.impl.SchedulerQueueWorker.run(SchedulerQueueWorker.java:35)
    at java.lang.Thread.run(java.base@11.0.15/Thread.java:829)

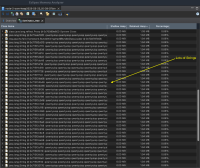
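The thread-dump check can be automated. This Java sketch assumes jstack-style dumps, where threads are separated by blank lines and each thread section starts with its quoted name. It reports the header line of every Caesium thread whose stack contains AbstractJournalIndexTaskQueue.flushQueue. The class and method names are hypothetical. A match in a single dump only shows that a flush is running at that moment.

```java
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.ArrayList;
import java.util.List;

public class CaesiumFlushFinder {

    static final String FLUSH_FRAME = "AbstractJournalIndexTaskQueue.flushQueue";

    // Returns the header line of every Caesium thread whose stack includes flushQueue.
    static List<String> flushingCaesiumThreads(String dump) {
        List<String> hits = new ArrayList<>();
        // Normalize line endings, then split on blank (or whitespace-only) lines.
        for (String section : dump.replace("\r\n", "\n").split("\n\\s*\n")) {
            String thread = section.strip();
            if (thread.startsWith("\"Caesium") && thread.contains(FLUSH_FRAME)) {
                hits.add(thread.lines().findFirst().orElse(""));
            }
        }
        return hits;
    }

    // Usage: java CaesiumFlushFinder dump1.txt dump2.txt ...
    public static void main(String[] args) throws IOException {
        for (String file : args) {
            String dump = Files.readString(Path.of(file), StandardCharsets.ISO_8859_1);
            for (String header : flushingCaesiumThreads(dump)) {
                System.out.println(file + ": " + header);
            }
        }
    }
}
```

If the same Caesium thread appears across consecutive 5-second dumps, and the cpu= value in its header keeps growing, that is consistent with the pattern described above.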

In the heap dump, a large number of String objects can be seen.

Workaround

Workaround 1 - recover from a snapshot

- If there is one node in the cluster that is not affected on restart, perform the following:

  - Back up and empty the <Confluence-Shared-Home>/index-snapshots directory (empty out only its contents).

  - On the node with the working index, navigate to Confluence Administration » General Configuration » Scheduled Jobs.

  - Click Run on Clean Journal Entries once.

    - Nothing may appear to happen, but the node will start zipping up its local index files into <Confluence-Shared-Home>/index-snapshots. Do not click Run more than once.

  - Wait until the zip file sets are created in <Confluence-Shared-Home>/index-snapshots, e.g.:

    index-snapshots % ls -l
    total 54304
    -rw------- 1 user staff 367860 22 Mar 02:14 IndexSnapshot_change_index_52942.zip
    -rw------- 1 user staff 5 22 Mar 02:14 IndexSnapshot_change_index_journal_id
    -rw------- 1 user staff 10954 22 Mar 02:14 IndexSnapshot_edge_index_28085.zip
    -rw------- 1 user staff 5 22 Mar 02:14 IndexSnapshot_edge_index_journal_id
    -rw------- 1 user staff 27410295 22 Mar 02:14 IndexSnapshot_main_index_52943.zip
    -rw------- 1 user staff 5 22 Mar 02:14 IndexSnapshot_main_index_journal_id

  - As soon as the zip files have been created in the index-snapshots directory, do the following on the node that fails to start up:

    - Back up and clear out the <Confluence-Local-Home>/index and <Confluence-Local-Home>/journal directories.

    - Start up this node.

      - The node will automatically recover the index files from the latest snapshot in <Confluence-Shared-Home>/index-snapshots.

      - The node should start up without the large flushQueue memory consumption.
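Two of the file-level steps above can be sketched in Java: waiting for the snapshot set, and backing up and clearing the local index and journal directories. The expected file names come from the example listing: for each of change, edge and main, one IndexSnapshot_<name>_index_<id>.zip and one IndexSnapshot_<name>_index_journal_id. The backup directory layout and the method names are assumptions. Note that the presence of a file does not prove a zip has finished writing, and backupAndClear must only be run while the affected node is stopped.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.time.LocalDateTime;
import java.time.format.DateTimeFormatter;
import java.util.List;
import java.util.regex.Pattern;
import java.util.stream.Collectors;
import java.util.stream.Stream;

public class SnapshotRecovery {

    // Index names seen in the example index-snapshots listing.
    static final List<String> INDEXES = List.of("change", "edge", "main");

    // True when every index has IndexSnapshot_<name>_index_<digits>.zip and its _journal_id file.
    // Presence only: it cannot tell whether a zip is still being written.
    static boolean snapshotsReady(Path snapshotDir) throws IOException {
        List<String> names;
        try (Stream<Path> files = Files.list(snapshotDir)) {
            names = files.map(p -> p.getFileName().toString()).collect(Collectors.toList());
        }
        for (String index : INDEXES) {
            String prefix = "IndexSnapshot_" + index + "_index_";
            Pattern zip = Pattern.compile(Pattern.quote(prefix) + "\\d+\\.zip");
            boolean hasZip = names.stream().anyMatch(n -> zip.matcher(n).matches());
            if (!hasZip || !names.contains(prefix + "journal_id")) return false;
        }
        return true;
    }

    // Moves <local-home>/index and <local-home>/journal into a timestamped backup folder,
    // then recreates them empty. Run only while the node is stopped. Files.move renames
    // directories, so backupRoot is assumed to be on the same file system as localHome.
    static Path backupAndClear(Path localHome, Path backupRoot) throws IOException {
        String stamp = LocalDateTime.now().format(DateTimeFormatter.ofPattern("yyyyMMdd-HHmmss"));
        Path target = Files.createDirectories(backupRoot.resolve("local-index-backup-" + stamp));
        for (String dir : List.of("index", "journal")) {
            Path source = localHome.resolve(dir);
            if (Files.isDirectory(source)) {
                Files.move(source, target.resolve(dir));
                Files.createDirectories(source);
            }
        }
        return target;
    }

    public static void main(String[] args) throws IOException {
        if (args.length != 1) {
            System.err.println("usage: SnapshotRecovery <index-snapshots directory>");
            return;
        }
        System.out.println("snapshot set complete: " + snapshotsReady(Path.of(args[0])));
    }
}
```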

Workaround 2 - Increase heap memory for Confluence

Depending on feasibility, an alternative workaround is to increase the Java maximum heap (Xmx) to, for example, 50-64GB (and increase the server RAM accordingly), which should meet the increased memory demand of flushQueue on startup:

- On the node where Confluence is failing to start due to the above symptoms, increase the maximum heap to, for example, 50g.

- Start Confluence.

- Confluence should then have enough memory to complete flushQueue and form a stable cluster.
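Before restarting with a larger heap, it may be worth confirming which -Xmx value the JVM options actually resolve to. This matters, for example, when an options string such as CATALINA_OPTS in setenv.sh ends up containing more than one -Xmx. This Java sketch assumes HotSpot's usual behavior of honoring the last -Xmx it sees. It parses k/m/g/t suffixes as powers of 1024. The class and method names are hypothetical.

```java
import java.util.Locale;
import java.util.OptionalLong;

public class HeapCheck {

    // Converts a JVM size such as "6g", "6144m" or "512k" to bytes (1k = 1024).
    static long parseSize(String value) {
        String v = value.trim().toLowerCase(Locale.ROOT);
        if (v.isEmpty()) throw new IllegalArgumentException("empty size");
        int shift;
        switch (v.charAt(v.length() - 1)) {
            case 'k': shift = 10; break;
            case 'm': shift = 20; break;
            case 'g': shift = 30; break;
            case 't': shift = 40; break;
            default: shift = 0; // plain byte count
        }
        String digits = shift == 0 ? v : v.substring(0, v.length() - 1);
        return Long.parseLong(digits) << shift;
    }

    // Returns the last -Xmx in an options string, assuming the last occurrence wins.
    static OptionalLong effectiveXmx(String jvmOptions) {
        OptionalLong result = OptionalLong.empty();
        for (String token : jvmOptions.trim().split("\\s+")) {
            if (token.startsWith("-Xmx")) result = OptionalLong.of(parseSize(token.substring(4)));
        }
        return result;
    }

    // Usage: java HeapCheck -Xms1024m -Xmx6g -Xmx50g
    public static void main(String[] args) {
        OptionalLong xmx = effectiveXmx(String.join(" ", args));
        if (xmx.isPresent()) {
            System.out.printf("effective -Xmx: %.1f GiB%n", xmx.getAsLong() / (double) (1L << 30));
        } else {
            System.out.println("no -Xmx found");
        }
    }
}
```

To check a running node instead, jcmd <pid> VM.flags prints the MaxHeapSize the JVM is actually using.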

- is related to:

  - CONFSERVER-78466 Incremental index causes slowness in node/cluster when a large amount of content is added while the node is offline (Closed)

  - PS-128135

- relates to:

  - CONFSERVER-83297 Confluence crashes with out of memory (OOM) error during flushQueue shortly after startup for a page with a large number of version history and unique editors (Closed)
