-

Type:

Bug

-

Resolution: Fixed

-

Priority:

Low

-

Affects Version/s: 7.9.0, 7.13.0, 8.2.4, 8.5.4

-

Component/s: Data Center - Node replication

-

7.09

-

46

-

Severity 2 - Major

-

11

-

Summary

Asynchronous cache replication adds significant overhead when there is a large number of cache updates and many stale nodes.

Environment

- Jira DC

- A large number of stale nodes (see JRASERVER-42916)

- Plugin (code) generating a large number of cache update events, e.g. reaching 2000 messages/min.
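To illustrate why both conditions matter together, here is a rough back-of-the-envelope sketch. It assumes, based on the `addToAllQueues` frame in the stack trace in this report, that every cache update event is appended once to a local queue for each other known node, stale or not. The function name and the node counts are hypothetical; only the 2000 messages/min rate comes from this report.

```python
def queue_writes_per_minute(events_per_minute: int, other_nodes: int) -> int:
    """Estimate local queue writes per minute on a single node.

    Assumption (not confirmed by the report): one persistent queue exists
    per other known node, live or stale, and each cache update event is
    appended to every one of those queues.
    """
    if events_per_minute < 0 or other_nodes < 0:
        raise ValueError("inputs must be non-negative")
    return events_per_minute * other_nodes


if __name__ == "__main__":
    # 2000 events/min is the rate quoted in the report;
    # the node counts below are purely illustrative.
    for nodes in (3, 20, 50):
        print(nodes, queue_writes_per_minute(2000, nodes))
```

Under this assumption the write load grows linearly with the number of node entries, which matches the degradation described in this report.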

Steps to Reproduce

- Open a URL that triggers cache update events while computing its business logic

  - E.g. /rest/servicedesk/1/<PRJ>/webfragments/sections/sd-queues-nav,servicedesk.agent.queues,servicedesk.agent.queues.ungrouped

- Measure the response time and the number of replication events
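The response-time part of the last step could be scripted along these lines. This is a minimal sketch, not an official procedure: `time_calls` is a hypothetical helper that times any zero-argument callable, and the commented usage assumes a placeholder `BASE_URL` and whatever authentication your instance needs. It does not count replication events.

```python
import statistics
import time
from typing import Callable, Dict


def time_calls(fetch: Callable[[], object], runs: int = 20) -> Dict[str, float]:
    """Call `fetch` repeatedly and summarise wall-clock latency in seconds."""
    if runs < 1:
        raise ValueError("runs must be at least 1")
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        fetch()
        samples.append(time.perf_counter() - start)
    return {
        "runs": runs,
        "median_s": statistics.median(samples),
        "max_s": max(samples),
    }


# Hypothetical usage (BASE_URL and credentials are placeholders):
#
# import urllib.request
# url = BASE_URL + ("/rest/servicedesk/1/<PRJ>/webfragments/sections/"
#                   "sd-queues-nav,servicedesk.agent.queues,"
#                   "servicedesk.agent.queues.ungrouped")
# print(time_calls(lambda: urllib.request.urlopen(url).read()))
```

Running the same measurement before and after cleaning up stale nodes gives a simple comparison of the two states.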

Expected Results

Performance doesn't degrade as the number of stale nodes grows.

Actual Results

Performance degrades as the number of stale nodes grows.

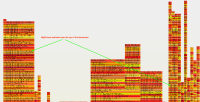

- While taking thread dumps you can see a lot of threads busy in the following stack:

      java.lang.Thread.State: RUNNABLE
          at java.io.RandomAccessFile.writeBytes(Native Method)
          at java.io.RandomAccessFile.write(RandomAccessFile.java:512)
          at com.squareup.tape.QueueFile.writeHeader(QueueFile.java:184)
          at com.squareup.tape.QueueFile.add(QueueFile.java:321)
          - locked <0x00000003ce9b43e0> (a com.squareup.tape.QueueFile)
          at com.squareup.tape.FileObjectQueue.add(FileObjectQueue.java:46)
          at com.atlassian.jira.cluster.distribution.localq.tape.TapeLocalQCacheOpQueue.add(TapeLocalQCacheOpQueue.java:151)
          at com.atlassian.jira.cluster.distribution.localq.LocalQCacheOpQueueWithStats.add(LocalQCacheOpQueueWithStats.java:115)
          at com.atlassian.jira.cluster.distribution.localq.LocalQCacheManager.addToQueue(LocalQCacheManager.java:370)
          at com.atlassian.jira.cluster.distribution.localq.LocalQCacheManager.addToAllQueues(LocalQCacheManager.java:354)
          at com.atlassian.jira.cluster.distribution.localq.LocalQCacheReplicator.replicateToQueue(LocalQCacheReplicator.java:85)
          at com.atlassian.jira.cluster.distribution.localq.LocalQCacheReplicator.replicatePutNotification(LocalQCacheReplicator.java:65)
          at com.atlassian.jira.cluster.cache.ehcache.AbstractJiraCacheReplicator.notifyElementUpdated(AbstractJiraCacheReplicator.java:123)
          at net.sf.ehcache.event.RegisteredEventListeners.internalNotifyElementUpdated(RegisteredEventListeners.java:228)
          at net.sf.ehcache.event.RegisteredEventListeners.notifyElementUpdated(RegisteredEventListeners.java:206)
          ...

- In one customer case, 15-20% of all threads were executing replicateToQueue
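A similar percentage can be estimated from your own thread dumps with a small script. The sketch below assumes a HotSpot-style dump (for example from `jstack`) in which each thread entry begins with a line starting with the quoted thread name. The function names are hypothetical, and the frame string is taken from the stack trace in this report.

```python
from typing import List, Tuple

FRAME = "LocalQCacheReplicator.replicateToQueue"


def split_threads(dump_text: str) -> List[str]:
    """Split a thread dump into one text block per thread.

    Assumes each thread entry starts with a line beginning with a
    double quote (the quoted thread name); anything before the first
    such line is ignored.
    """
    threads: List[List[str]] = []
    current = None
    for line in dump_text.splitlines():
        if line.startswith('"'):
            current = [line]
            threads.append(current)
        elif current is not None:
            current.append(line)
    return ["\n".join(t) for t in threads]


def fraction_in_frame(dump_text: str, frame: str = FRAME) -> Tuple[int, int, float]:
    """Return (matching threads, total threads, fraction) for `frame`."""
    threads = split_threads(dump_text)
    matching = sum(1 for t in threads if frame in t)
    total = len(threads)
    return matching, total, (matching / total if total else 0.0)
```

For example, `fraction_in_frame(open("threaddump.txt").read())` (the file name is a placeholder) reports how many threads contain the frame. Averaging over several dumps taken a few seconds apart gives a steadier picture than a single snapshot.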

Notes

None

Workaround

Clean up old node data manually; see JRASERVER-42916.

- is related to

  - JRASERVER-65538 Active nodes query for offline node messages and index operations (Closed)

  - JRASERVER-42916 Stale node ids should automatically be removed in Jira Data Center (Closed)

  - JRASERVER-67019 Asynchronous cache replication in Jira Data Center (Closed)

- relates to

  - JSDSERVER-6490 Opening Service Desk Queue will send many Cache replication requests for Queue Count (Closed)
