-

Type:

Bug

-

Resolution: Fixed

-

Priority:

Medium

-

Affects Version/s: 5.10.8, 6.6.5

-

Component/s: Server - Performance

-

11

-

Severity 1 - Critical

-

16

-

Problem Summary

Performance bottlenecks can be triggered by Label related queries on large Confluence instances.

Problem Details

Confluence provides users with a handful of Label related macros. These macros can be used to find the most popular labels in a space, find recently used macros, to get a comprehensive report of pages that have specific labels and so goes on.

The main action behind such functionalities consists of the application going to the database to retrieve a list of labels based on the input provided by the macros. This is exactly where the problem happens, given the nature of the queries. Symptoms observed have been:

- Overall application slowness.

- Increased response time on the database size due to what seems to be a huge amount of data returned by the Label queries.

- Stuck threads waiting to interact with the database - This happens because the Label related threads make the database busy.

- Need to restart the application to make it usable once again.

Thread Dumps

Thread Dumps generated during situations like the above mentioned one show the application stuck for long time executing an Hibernate Statement in the database. The statement comes from the findMostPopular method:

"http-nio2-8443-exec-277" #512 daemon prio=5 os_prio=0 tid=0x00007f354c055800 nid=0x389c runnable [0x00007f323d893000] java.lang.Thread.State: RUNNABLE at java.net.SocketInputStream.socketRead0(Native Method) at java.net.SocketInputStream.socketRead(SocketInputStream.java:116) at java.net.SocketInputStream.read(SocketInputStream.java:171) at java.net.SocketInputStream.read(SocketInputStream.java:141) at sun.security.ssl.InputRecord.readFully(InputRecord.java:465) at sun.security.ssl.InputRecord.read(InputRecord.java:503) at sun.security.ssl.SSLSocketImpl.readRecord(SSLSocketImpl.java:983) - locked <0x00000005dc024f38> (a java.lang.Object) at sun.security.ssl.SSLSocketImpl.readDataRecord(SSLSocketImpl.java:940) at sun.security.ssl.AppInputStream.read(AppInputStream.java:105) - locked <0x00000005dc024ff8> (a sun.security.ssl.AppInputStream) at com.mysql.jdbc.MysqlIO.readFully(MysqlIO.java:3008) at com.mysql.jdbc.MysqlIO.reuseAndReadPacket(MysqlIO.java:3469) at com.mysql.jdbc.MysqlIO.reuseAndReadPacket(MysqlIO.java:3459) at com.mysql.jdbc.MysqlIO.checkErrorPacket(MysqlIO.java:3900) at com.mysql.jdbc.MysqlIO.sendCommand(MysqlIO.java:2527) at com.mysql.jdbc.MysqlIO.sqlQueryDirect(MysqlIO.java:2680) at com.mysql.jdbc.ConnectionImpl.execSQL(ConnectionImpl.java:2487) - locked <0x00000005dc025160> (a com.mysql.jdbc.JDBC4Connection) at com.mysql.jdbc.PreparedStatement.executeInternal(PreparedStatement.java:1858) - locked <0x00000005dc025160> (a com.mysql.jdbc.JDBC4Connection) at com.mysql.jdbc.PreparedStatement.executeQuery(PreparedStatement.java:1966) - locked <0x00000005dc025160> (a com.mysql.jdbc.JDBC4Connection) at com.mchange.v2.c3p0.impl.NewProxyPreparedStatement.executeQuery(NewProxyPreparedStatement.java:379) at net.sf.hibernate.impl.BatcherImpl.getResultSet(BatcherImpl.java:89) at net.sf.hibernate.loader.Loader.getResultSet(Loader.java:880) at net.sf.hibernate.loader.Loader.doQuery(Loader.java:273) at net.sf.hibernate.loader.Loader.doQueryAndInitializeNonLazyCollections(Loader.java:138) at net.sf.hibernate.loader.Loader.doList(Loader.java:1063) at net.sf.hibernate.loader.Loader.list(Loader.java:1054) at net.sf.hibernate.hql.QueryTranslator.list(QueryTranslator.java:854) at net.sf.hibernate.impl.SessionImpl.find(SessionImpl.java:1570) at net.sf.hibernate.impl.QueryImpl.list(QueryImpl.java:49) at com.atlassian.hibernate.HibernateObjectDao.lambda$findNamedQueryStringParams$5(HibernateObjectDao.java:432) at com.atlassian.hibernate.HibernateObjectDao$$Lambda$479/1754201883.doInHibernate(Unknown Source) at org.springframework.orm.hibernate.HibernateTemplate.execute(HibernateTemplate.java:370) at org.springframework.orm.hibernate.HibernateTemplate.executeFind(HibernateTemplate.java:341) at com.atlassian.hibernate.HibernateObjectDao.findNamedQueryStringParams(HibernateObjectDao.java:408) at com.atlassian.hibernate.HibernateObjectDao.findNamedQueryStringParam(HibernateObjectDao.java:308) at com.atlassian.confluence.labels.persistence.dao.hibernate.HibernateLabelDao.performNonMySqlMostPopular(HibernateLabelDao.java:352) at com.atlassian.confluence.labels.persistence.dao.hibernate.HibernateLabelDao.findMostPopular(HibernateLabelDao.java:345) at com.atlassian.confluence.labels.DefaultLabelManager.getMostPopularLabels(DefaultLabelManager.java:571) at com.atlassian.confluence.labels.CachingLabelManager.lambda$getMostPopularLabels$0(CachingLabelManager.java:219) at com.atlassian.confluence.labels.CachingLabelManager$$Lambda$1442/1161219258.apply(Unknown Source) at com.atlassian.confluence.internal.labels.MostPopularCache.valueWrapper(MostPopularCache.java:51) at com.atlassian.confluence.internal.labels.MostPopularCache.lambda$getMostPopularGlobal$0(MostPopularCache.java:39) at com.atlassian.confluence.internal.labels.MostPopularCache$$Lambda$1445/643250503.get(Unknown Source)

Database Logs

As far as the database logging goes, there are queries that correlates with the above thread dump (they are triggered by findMostPopular method) and that takes a long time to be executed - Usually something in between 5 to 10 minutes:

SELECT * FROM ( SELECT label1_.LABELID AS col_0_0_, CAST (COUNT (labelling0_.LABELID) AS NUMBER (10, 0)) AS col_1_0_, label1_.LABELID AS LABELID1_43_, label1_.NAME AS NAME2_43_, label1_.OWNER AS OWNER3_43_, label1_.NAMESPACE AS NAMESPACE4_43_, label1_.CREATIONDATE AS CREATIONDATE5_43_, label1_.LASTMODDATE AS LASTMODDATE6_43_ FROM CONTENT_LABEL labelling0_, LABEL label1_, CONTENT contentent2_ WHERE labelling0_.LABELID = label1_.LABELID AND labelling0_.CONTENTID = contentent2_.CONTENTID AND label1_.NAMESPACE = :1 AND contentent2_.CONTENT_STATUS <> 'deleted' GROUP BY label1_.LABELID, label1_.NAMESPACE, label1_.OWNER, label1_.NAME, label1_.LASTMODDATE, label1_.CREATIONDATE ORDER BY CAST (COUNT (labelling0_.LABELID) AS NUMBER (10, 0)) DESC) WHERE ROWNUM <= :2

Another example would be:

select labelling0_.ID as ID, labelling0_.LABELID as LABELID, labelling0_.CONTENTID as CONTENTID, labelling0_.PAGETEMPLATEID as PAGETEMP4_, labelling0_.OWNER as OWNER, labelling0_.CREATIONDATE as CREATION6_, labelling0_.LASTMODDATE as LASTMODD7_, labelling0_.LABELABLEID as LABELABL8_, labelling0_.LABELABLETYPE as LABELABL9_ from CONTENT_LABEL labelling0_, LABEL label1_, CONTENT contentent2_ where (labelling0_.LABELID=label1_.LABELID )and(label1_.NAMESPACE='global' )and(contentent2_.CONTENT_STATUS!='deleted' and labelling0_.CONTENTID=contentent2_.CONTENTID) order by labelling0_.LASTMODDATE

Also, the following query represents yet another example:

SELECT * FROM ( SELECT labelling0_.ID AS ID1_13_ , labelling0_.LABELID AS LABELID2_13_ , labelling0_.CONTENTID AS CONTENTID3_13_ , labelling0_.PAGETEMPLATEID AS PAGETEMPLATEID4_13_, labelling0_.OWNER AS OWNER5_13_ , labelling0_.CREATIONDATE AS CREATIONDATE6_13_ , labelling0_.LASTMODDATE AS LASTMODDATE7_13_ , labelling0_.LABELABLEID AS LABELABLEID8_13_ , labelling0_.LABELABLETYPE AS LABELABLETYPE9_13_ FROM CONTENT_LABEL labelling0_, LABEL label1_ , CONTENT contentent2_ WHERE labelling0_.CONTENTID =contentent2_.CONTENTID AND labelling0_.LABELID =label1_.LABELID AND label1_.NAMESPACE ='global' AND contentent2_.CONTENT_STATUS<>'deleted' ORDER BY labelling0_.LASTMODDATE DESC ) WHERE rownum <= :1

Suggested Root Cause

Depending on the size of the Content Table, above queries can be quite expensive and take a long time to run. To know the size of one's instance, run the following query:

select content_status, count(*) from content group by content_status;

Tests show that instances with 15M entries in the Content table can take up to 90s to have the above queries returning results, regardless of indexes. Therefore, the problem is related to the scalability of the Label logic in Confluence.

Which action can trigger such queries?

- Through a call to /labels/autocompletelabel.action - This is a call to retrieve a list of labels to auto complete an user input when one is adding labels to a page. This call will only jeopardise the application health if there's no Contend ID in the URL - For example, when adding labels to an unpublished page where Content ID is still not referenced in the URL.

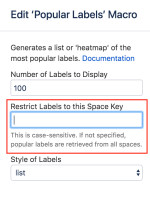

- When using the Popular Labels Macro without a Space Key defined on it:

Workaround

There are a few things we can do to mitigate the impact of this problem:

- To block calls to _/labels/autocompletelabel.action_ in a Proxy/Tomcat level. Downside is that auto-completion of labels won't work.

- To enforce users to define a Space Key when adding the Popular Labels Macro.

Extra:

In order to know which pages have the Popular Labels Macro without a Space Key set to it, one can run the query below - It has been tested in PostgreSQL and there may be a need to tweak it a little bit for other database flavours:

select c.title, c.contentid, s.spacekey from content c, spaces s where contentid in ( select contentid from bodycontent where body like '%ac:name="popular-labels"%' and body not ilike'%<ac:parameter ac:name="spaceKey">%') and c.prevver is null and c.spaceid = s.spaceid;

With the above list in hands, one can go to each page and edit the Macro to have a Space Key on it to workaround the performance issue.

- is related to

-

CONFSERVER-22726 Improve performance of suggest labels functionality for large instances

-

- Closed

-

- relates to

-

CONFSERVER-60137 Most popular label macro: enable caching

- Closed

-

PSR-263 Loading...

- mentioned in

-

Page Loading...

-

Page Loading...

-

Page Loading...

-

Page Loading...

-

Page Loading...

-

Page Loading...

-

Page Loading...

-

Page Loading...

-

Page Loading...

-

Page Loading...

-

Page Loading...

-

Page Loading...

-

Page Loading...

-

Page Loading...

-

Page Loading...

-

Page Loading...

-

Page Loading...

-

Page Loading...

-

Page Loading...

-

Page Loading...

-

Page Loading...

-

Page Loading...

-

Page Loading...

-

Page Loading...

-

Page Loading...

-

Page Loading...

-

Page Loading...

-

Page Loading...

-

Page Loading...

-

Page Loading...

-

Page Loading...

-

Page Loading...

-

Page Loading...

-

Page Loading...