- Type: Bug
- Resolution: Fixed
- Priority: Medium
- Component/s: User - SSH Keys

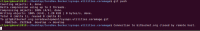

I have a cron job that pulls from a Bitbucket repository once an hour. Every so often, the cron job emails me this error:

    ssh_exchange_identification: Connection closed by remote host
    fatal: The remote end hung up unexpectedly

This started a few weeks ago, when it happened perhaps once every few days. Now it's getting worse and happens almost every hour.

To check, I set up the same cron job on a completely separate host, and it's showing the same behavior. So I suspect the problem is on the server's end.