Bug

Resolution: Fixed

Low

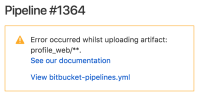

Our Bitbucket pipeline often fails with a "System error" that says "Error occurred whilst uploading artifact".

Here is the step from our bitbucket-pipelines.yml where the system error occurred:

    - step:
        name: Generate APIBlueprint documentation
        image:
          name: <custom docker image hosted on aws ecr>
          aws:
            access-key: $AWS_ACCESS_KEY_ID
            secret-key: $AWS_SECRET_ACCESS_KEY
        script:
          - <generate documentation.apib file>
          - cp documentation.apib dist/documentation.apib
        artifacts:
          - dist/documentation.apib

Re-running the pipeline usually solves the problem, which leads me to think the issue is not in our bitbucket-pipelines.yml file.

Attachment 543547393-Capture%20d%E2%80%99%C3%A9cran%202017-10-20%20%C3%A0%2010.59.31.png has been added with description: Originally embedded in Bitbucket issue #15062 in site/master