- Type: Bug
- Resolution: Fixed
- Priority: Highest
- Affects Version/s: 2.10.2
- Component/s: None
- Environment: jdk6u12 (32-bit and 64-bit), MySQL 5, WAR, cluster (but there is only one node in the cluster)

Over the last several weeks we've been seeing frequent Confluence instability at wikis.sun.com, all of it caused by running out of heap space. Repeatedly increasing the maximum heap size (-Xmx) didn't help: we started at 3GB and are now at 5GB on a 64-bit JVM.

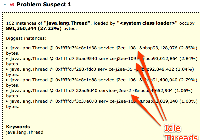

I took several memory dumps during outages and analyzed them with Eclipse Memory Analyzer, which repeatedly found two issues:

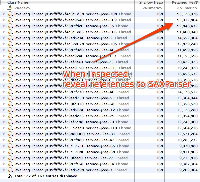

- Something is storing Xerces SAXParser objects in ThreadLocal variables. This results in up to 90MB being retained per thread, and several instances of this size are held in memory at once, for a total of 800-1200MB retained.

- Hundreds of instances of net.sf.hibernate.impl.SessionImpl retain an additional ~780MB of memory. I'll document this as a separate issue.
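The first finding matches a common caching pattern. The sketch below is hypothetical: it is not Confluence's or Xerces' code, and the class `ThreadLocalParserCache` and its methods are invented for illustration. It assumes parsers are obtained through the standard JAXP `SAXParserFactory` and cached one per thread. It is also written for a current JDK (Java 8+ for `ThreadLocal.withInitial`), not the jdk6u12 in this report. Whatever such a parser still references after a parse stays reachable through the owning thread's `ThreadLocal` entry until `remove()` is called or the thread dies. On a long-lived container thread, that can mean indefinitely.

```java
import javax.xml.parsers.ParserConfigurationException;
import javax.xml.parsers.SAXParser;
import javax.xml.parsers.SAXParserFactory;
import org.xml.sax.SAXException;

// Hypothetical sketch of per-thread parser caching; not the actual
// code path that the heap dumps point to.
class ThreadLocalParserCache {
    // One parser per thread, created lazily on first use. The parser stays
    // referenced by the thread until remove() is called or the thread exits.
    private static final ThreadLocal<SAXParser> PARSER = ThreadLocal.withInitial(() -> {
        try {
            // A fresh factory per call, since SAXParserFactory instances
            // are not guaranteed to be thread-safe.
            return SAXParserFactory.newInstance().newSAXParser();
        } catch (ParserConfigurationException | SAXException e) {
            throw new IllegalStateException("could not create SAX parser", e);
        }
    });

    // Returns this thread's cached parser, creating it on first use.
    static SAXParser parserForCurrentThread() {
        return PARSER.get();
    }

    // Drops this thread's parser. Without a call like this, a pooled thread
    // keeps its parser (and everything it references) even while idle.
    static void releaseForCurrentThread() {
        PARSER.remove();
    }
}
```

Caching a parser per thread avoids repeated construction cost. The trade-off is that the cache's lifetime is tied to the thread rather than to the request that used it.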

Just before taking the heap dump, I also took a thread dump. Comparing the two, I found that the threads holding on to the huge thread-local variables were sitting idle in the container's thread pool and were not processing any requests, so they should have had minimal memory requirements.
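Idle pool threads still holding their thread-local values is consistent with how `ThreadLocal` works: a value lives as long as the thread that set it, not as long as the request that used it. The hypothetical demo below uses a single-thread `ExecutorService` as a stand-in for the container's thread pool. The class `PooledThreadLocalDemo` and the 1MB byte array standing in for a cached parser are illustrative assumptions, and the code targets a current JDK (Java 8+), not jdk6u12. It runs two tasks on the same worker and checks whether the second task still sees what the first left behind. Calling `remove()` in a `finally` block is one common mitigation. Whether it applies here depends on the code that creates the thread-local, which this report does not identify.

```java
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

// Hypothetical demo: a pooled worker thread keeps its ThreadLocal value
// after the task that set it has finished, unless remove() is called.
class PooledThreadLocalDemo {
    // Stand-in for a large per-thread cache such as a parser (illustrative only).
    private static final ThreadLocal<byte[]> CACHE = new ThreadLocal<>();

    // Runs two tasks on one pooled thread and reports whether the second
    // task still sees the value that the first task stored.
    static boolean valueSurvivesBetweenTasks(boolean cleanUp) {
        ExecutorService pool = Executors.newSingleThreadExecutor();
        try {
            // Task 1: "handle a request" that populates the per-thread cache.
            pool.submit(() -> {
                try {
                    CACHE.set(new byte[1024 * 1024]); // ~1MB retained per thread
                } finally {
                    if (cleanUp) {
                        CACHE.remove(); // release before the thread goes back to the pool
                    }
                }
            }).get();
            // Task 2 runs on the same worker thread, which sat idle in between.
            return pool.submit(() -> CACHE.get() != null).get();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } catch (ExecutionException e) {
            throw new IllegalStateException(e.getCause());
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println("without remove(): " + valueSurvivesBetweenTasks(false)); // true
        System.out.println("with remove():    " + valueSurvivesBetweenTasks(true));  // false
    }
}
```

Without `remove()`, the array stays reachable from the idle worker thread, which mirrors what the heap and thread dumps show. With it, the worker returns to the pool holding nothing.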

I'm attaching some annotated screenshots from Eclipse Memory Analyzer and a thread dump that proves that the misbehaving threads were idle.